Shifting to an AI mindset

Why building products will never be the same

One of my purest pleasures is hiking the hills above Los Angeles. On my typical route, I get 360-degree views encompassing downtown LA, the sweeping coastline, and the low-slung mountains surrounding LA County.

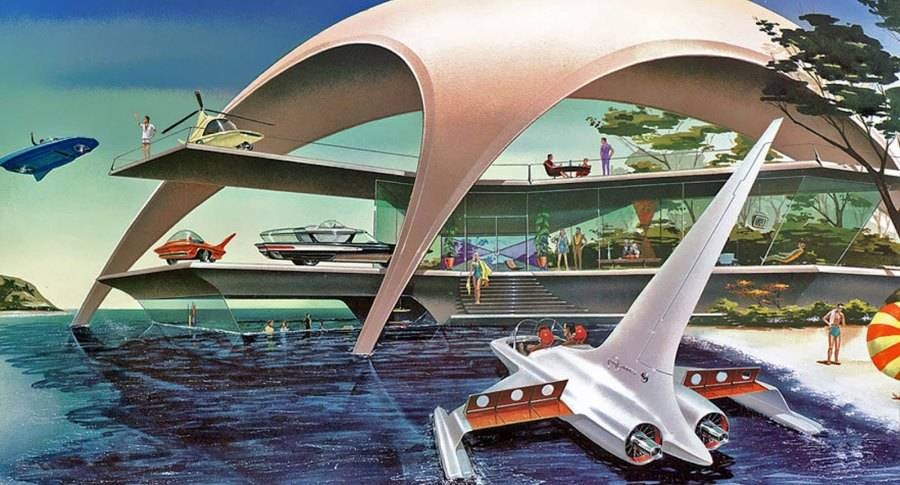

Remarkably, this view doesn't look much different from the way it did 75 years ago, at mid-century, when people imagined a space-age future. There are no flying cars, no skyscrapers reaching into the atmosphere, and no Blade Runner-esque urban sprawl.

Yet things have changed profoundly. Almost all of those changes have come in information technology: in bits, not atoms.

These changes have happened in three stages, and now we are in the fourth.

A brief history of information technology

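About ten years ago, xkcd published a comic ("Tasks") in which a developer is asked to build an app that checks whether a photo was taken in a national park and whether it shows a bird. The first task is an easy GIS lookup that takes a few hours; the second needs a research team and five years.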

That comic held true for decades, but no longer. Today, detecting a bird in a photo wouldn't take more than a few hours either. So what changed?

Stage 1: The Computation Era

In the 1940s, the invention of the transistor ushered in an age of computation. We can think of computation very simply in terms of three parts: an input, a set of rules, and an output.

Our input can be as simple as two numbers, and our rule can be as simple as adding those numbers together. Given an input and a set of rules, computation delivers an output.
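The three parts can be sketched in a few lines of Python. The function names `add_rule` and `compute` are illustrative only; the point is that a person writes the rule explicitly, and the machine just applies it.

```python
# Computation Era model: an explicit, hand-written rule turns an input
# into an output. Names here are illustrative, not from any library.

def add_rule(a: int, b: int) -> int:
    """The rule: add the two input numbers together."""
    return a + b


def compute(inputs: tuple[int, int], rule) -> int:
    """Apply a rule to an input and return the output."""
    return rule(*inputs)


output = compute((2, 3), add_rule)  # input (2, 3), rule add_rule
print(output)  # prints 5
```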

The magic of our lifetimes has been that this simple concept has scaled exponentially. Every second, today’s computers can run trillions of rules on trillions of inputs to give us remarkable outputs. A game like Forza Motorsport can render, 60 times per second, a scene that would have taken hours a decade ago.

Stage 2: The Networking Era

By the 1980s, this explosion in computational power led to the personal computer revolution: a computer on every desk and in every home. Word processing, spreadsheets, databases, desktop publishing, and more provided a boom in productivity.

Yet these early PCs had a massive limitation: they couldn't talk to each other. Although networking began in the 1970s, it wasn't until the mid-1990s that the Internet became fast enough and easy enough for the average PC owner to use.

That's when things really took off. The early winners of the PC revolution, Microsoft, Dell, and Intel, were challenged by Internet-native companies like Google, Facebook, and Amazon.

Stage 3: The Ubiquity Era

In the early 2000s, we were reaping the benefits of powerful connected computers. The dot-com era led to generational successes, like Amazon, but also some surprising failures like Webvan (grocery delivery before Instacart), Kozmo (one-hour delivery before DoorDash and Gopuff), and Pets.com (pet delivery before Chewy).

What happened? The connected PCs of that era had a major limitation: they were big, power-hungry, and tethered to our desks. As in the past, a pivotal technology was emerging: not just mobile phones, but ubiquitous devices defined by low cost, low energy consumption, and computational power increasing with Moore's law.

This led to a new wave of pillar companies that could not have existed before. Uber took advantage of the fact that riders and drivers always have a location-aware, connected device nearby. Delivery startups found the product-market fit that was missing in the Web 1.0 era. Facebook, TikTok, YouTube, and Netflix took advantage of the hours of daily screen attention that materialized with a streaming device in every pocket.

Stage 4: The Learning Era

Computation, networking, and ubiquity — these three elemental innovations have combined to make extraordinary things possible. Each opened a door to new possibilities.

But some things have remained out of reach.

Computation has a fundamental constraint: we can only create what we can express. Let's go back to the xkcd comic.

The first product requirement, "when a user takes a photo, check whether they are in a national park", is a computational problem. We can project all the points on Earth onto a 2D plane, create polygons representing the boundaries of every national park, and then check whether a particular GPS location falls inside one of them. We can clearly express the rules that turn an input (the user's GPS location) into an output (whether they are in a national park).
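To show how expressible that rule is, here is a minimal Python sketch of the polygon check using the standard ray-casting (even-odd) test. It assumes the GPS coordinates have already been projected onto a 2D plane, and `PARK_BOUNDARY` is a hypothetical L-shaped polygon, not a real park outline. A production app would more likely rely on a GIS library and official boundary data, and would need a policy for points lying exactly on an edge, which this sketch does not handle specially.

```python
# Point-in-polygon check with the ray-casting (even-odd) rule.
# Assumption: coordinates are already projected onto a 2D plane, and
# PARK_BOUNDARY is a hypothetical polygon, not a real national park outline.

Point = tuple[float, float]


def point_in_polygon(point: Point, polygon: list[Point]) -> bool:
    """Return True if point lies inside polygon (vertices listed in order)."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # wrap around to close the polygon
        # Does this edge straddle the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge crosses that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside  # each crossing to the right toggles
    return inside


# Hypothetical park boundary (an L shape) on the projected plane.
PARK_BOUNDARY: list[Point] = [(0, 0), (10, 0), (10, 4), (4, 4), (4, 10), (0, 10)]


def in_national_park(location: Point) -> bool:
    """The explicit rule: a location is in the park if it is inside the boundary."""
    return point_in_polygon(location, PARK_BOUNDARY)


print(in_national_park((2, 8)))  # True: inside the vertical arm of the L
print(in_national_park((8, 8)))  # False: in the notch of the L
```

Every step here is a rule someone could write down, which is exactly what makes this half of the comic a few hours of work.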

The second product requirement, “check whether a bird is in the photo”, is different. We can’t readily express the rules that people use to figure out if a bird is in a photo. There are too many variations in shape, color, posture, camera angle, etc. to code into a ruleset. Go ahead, try it.

Over a lifetime, our brain has learned how to detect a bird in an instant, but we can’t explain how. Detecting birds is a learning problem, not a computational problem.

Since the earliest days of computing, computer scientists have been working on how to teach machines to learn. That involves turning computation inside out: instead of generating outputs from inputs and rules, we need to generate rules from inputs and outputs.
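A toy Python sketch of that inversion reuses the two-number addition example from the Computation Era. The learner never sees the rule; it only sees pairs of inputs and their outputs, and it recovers weights that reproduce addition. The linear model, gradient descent, and the names `learn_rule`, `steps`, and `lr` are assumptions chosen to keep the idea visible. A bird detector would need a far larger model and real labeled photos.

```python
# "Computation inside out": learn the rule from inputs and outputs.
# Assumption: the hidden rule is linear (output = w1*a + w2*b), and the
# weights are fit with plain gradient descent on mean squared error.

import random


def learn_rule(examples, steps=2000, lr=0.01):
    """Fit weights (w1, w2) so that w1*a + w2*b approximates each output."""
    w1, w2 = 0.0, 0.0
    n = len(examples)
    for _ in range(steps):
        grad1 = grad2 = 0.0
        for (a, b), target in examples:
            error = (w1 * a + w2 * b) - target
            # Gradient of the mean squared error with respect to each weight
            grad1 += 2 * error * a / n
            grad2 += 2 * error * b / n
        w1 -= lr * grad1
        w2 -= lr * grad2
    return w1, w2


# Inputs and outputs only: these examples come from addition,
# but the learner is never told that.
random.seed(0)
examples = []
for _ in range(50):
    a, b = random.uniform(-5, 5), random.uniform(-5, 5)
    examples.append(((a, b), a + b))

w1, w2 = learn_rule(examples)
print(round(w1, 3), round(w2, 3))  # both close to 1.0
print(round(w1 * 2 + w2 * 3, 3))   # the learned rule applied to (2, 3): close to 5.0
```

The output is a rule (two weights) rather than an answer, which is the reversal the paragraph describes.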

In the Learning Era, we are no longer limited by what we can express in code. Given a sufficient amount of data — for example photos tagged with whether or not they contain a bird — an AI model can learn how to detect birds in nearly any photo.

Even more importantly, AI models can be stacked on top of each other and combined with computational approaches to make extraordinary products that can work in magical ways. The capability to detect a bird — or any other object — has led to image and video generation. Quickly, machine learning evolved from understanding images to making them.

The same evolution is happening in LLMs. Language understanding led to language generation, which is now leading to chain-of-thought and language-based reasoning.

Now what?

As product builders, we're working in a new paradigm, and we need to be careful not to fall prey to the hype.

In fact, we’re already starting to see some of the disillusionment setting in from unimaginative uses of AI. Too many companies have dropped RAG-enabled chatbots and useless generators into their products, and too many investors have counted AI birds before they’ve hatched. I think 2025 is going to be a year of reckoning, but skepticism around AI will be misplaced.

Yes, AI is overhyped, but it will not be like VR or crypto. AI lifts a constraint that was ironclad for decades. We're no longer limited by what we can code.

Consider just one area: video editing. Today, we can already edit video as if it were still a script, an achievement made possible by Learning Era advances in speech-to-text and voice generation. Tomorrow, we'll be able to edit videos as if we were still behind the camera by zooming, panning, and extending clips with video generation models that can predict what's outside the frame.

This will be possible by snapping learning and computational approaches together like LEGO blocks. As product builders, we have capabilities that were impossible just a few years ago:

Real-time text classification and sentiment detection

Summarization

Semantic search

Prompted generation of text, images, and video

Retrieval augmented generation

Chatbots and assistants

Predictive autocomplete / copilots

Autonomous agents

Editing, iteration, and variant generation on text, images, and video

Perspective changes (panning, zooming) on images and video

Convincing speech generation and music generation

Dynamically generated UIs

and many more…

There will be very few product features that aren't possible, given enough time and data. We can truly solve our customers' problems without limitations.

As you build features, it’s important to shift to this new perspective. Two questions can help. When working on a new product or feature, ask:

Is this a computational problem or a learning problem? In other words, can you express the rules to solve the problem or do you need to train a model to learn rules you can’t express?

If it’s a learning problem, does the intelligence already exist to solve that problem or will you create it from available data?

These questions can help you determine the right approach, select the right model, or frame the research necessary to get to the next step.

AI takes the training wheels off the information age. Where the story goes is impossible to predict — but I can’t wait to find out.

What will you build in the Learning Era?
