Tool Augmented Generation: 2025 is the year AI learns to use your product

As a product builder, you need to stay ahead of the AI curve. In this post, I discuss where AI is going and how to keep your product at the frontier.

This post is brought to you by you.com: access 20+ AI models in one centralized, easy-to-use AI chat platform. As a “Ravi on Product” subscriber, get 6 months free of you.com Pro ($120 value). I often find myself going between ChatGPT and Claude when working on tough tasks. With you.com, you can interact with different models in the same thread, getting different angles and opinions. It's like having your own personal board of advisors.

I hate expense reports. I hate them so much, I let things pile up. But, that just makes things worse.

Yesterday, I reached my limit. The last thing I wanted to do was rummage through a folder full of receipts to pull together an expense report.

So, I didn’t.

In an act of desperation, I dragged all the files into ChatGPT and asked it to create the report.

I didn’t expect it to work. Large language models aren’t great at math, and how exactly was it going to make sense of PDFs, photos of crinkled paper, a printed itinerary, and a note-to-self for a receipt that had found a better home?

I held my breath as ChatGPT chugged away.

A minute later, I had a finished expense report, downloadable as a .CSV.

In one sense, the fact that advanced AI was able to perform a rudimentary clerical task isn't so surprising. Yet, my ask goes against what LLMs are built to do. They aren't designed for the simple arithmetic I asked for, nor are they designed to make sense of the mish-mash of files I dumped on ChatGPT.

So how was ChatGPT able to complete this task? By using the tools at its disposal:

File uploading

Optical character recognition to “read” the receipts

Code generation to compute the total

File generation to make a downloadable .CSV file

And the LLM itself to understand the request & orchestrate all of the pieces

In 2025, the tools available to LLMs are going to grow exponentially.

From RAG to TAG: The Evolution of Gen AI

Large language models have two fundamental limitations:

They are trained on a fixed set of data — their “long-term memory” is vast, but frozen in time

They are trained to do only one thing — generate language by predicting the next word

Let’s look at how these limitations are getting lifted.

Making the most of AI’s “working memory”

LLMs, much like people, have both a long-term memory and a working memory. Their working memory is defined by their "context window" and their long-term memory is defined by their training data. Think of it like the difference between everything you've ever learned (long-term memory) versus what you can actively think about right now (working memory).

Put simply, an LLM’s goal is to generate content based on what’s in its context window, falling back on training data when necessary.

“Prompt engineering” is really just about stuffing that context window with the information and instructions that will give us a good output. A bad prompt doesn’t give the LLM much to work with. So, we’ll get an answer, but that answer will be based on what the LLM plucks (somewhat at random) from its training data. As a result, output quality will be unpredictable and prone to hallucination. A good prompt, on the other hand, focuses the LLM on a set of clear instructions and relevant information.

Context windows have grown rapidly. The latest models have context windows of 128,000+ tokens, roughly the length of a full book. Longer context windows open up a massive opportunity: we can pull information from external sources into the context window. In other words, we can augment our prompts with information retrieved from sources like these, an approach known as Retrieval Augmented Generation:

file uploads (e.g., chat with a PDF)

web search (e.g., Perplexity and ChatGPT search)

vector databases (e.g., Glean and other knowledge base products)

and any other source of info within our products
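At its core, the retrieval step finds the most relevant snippets and places them in the prompt ahead of the user's question. The minimal sketch below illustrates this. The documents are made up, the word-overlap retriever is a toy, and the prompt wording is an assumption. Production RAG systems typically rank with embeddings stored in a vector database.

```python
import re

# Made-up knowledge base standing in for a company's own documents.
DOCUMENTS = [
    "Refunds are issued within 5 business days of receiving a returned item.",
    "Standard shipping takes 3 to 7 business days within the US.",
    "Premium members get free expedited shipping on all orders.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Toy retriever: rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: list[str]) -> str:
    """Augment the prompt: place retrieved context ahead of the user's question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


print(build_prompt("How long does shipping take?", DOCUMENTS))
```

The model never sees the refunds document here. Only the two snippets that mention shipping take up space in the context window.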

RAG = Your company’s data + AI

In 2024, the power of Retrieval Augmented Generation (RAG) was fully realized as product builders tapped into longer context windows. Instead of relying on training data frozen in time, RAG brings fresh, relevant data into the context window and, as a result, enables AI products that leverage a company’s data sources.

For example, I recently hosted a panel with Jeff Wang, Co-Founder of Amplitude. He discussed Amplitude’s approach to Ask Amplitude, their integrated chat feature. That feature uses RAG to pull in customer data when answering a user’s question. Now, Amplitude users can quickly generate insights by “talking with” their product’s data.

In a fast-moving AI landscape, this is exactly the right approach to establish competitive advantage. Instead of reinventing the LLM, pair the best models with content that is uniquely yours.

So, RAG enables companies to integrate their content and data into AI. But, for most companies, that only scratches the surface of the value they create for customers. Much of that value is locked in the functionality they provide: Uber enabling rides, Bill.com enabling invoice creation, Stripe enabling payments, and countless other use cases.

That’s where Tool Augmented Generation (TAG) comes in.

TAG = Your company’s functionality + AI

LLMs are trained to do one thing. They generate language and they do that very well. They can generate English, countless spoken languages, as well as computer code in languages like Python.

LLMs are not general-purpose computers, and they are pretty bad at many things computers are great at. For example, LLMs struggle with simple arithmetic. Unaided, LLMs can appear to do math, but only because they are recognizing patterns in language. They have seen the phrase 2 + 2 = 4 often enough that they “know” it to be true. That knowledge comes not from mathematical truth but from recognizing a pattern in our language.

Okay, so large language models are just word prediction machines. How then do they seem so intelligent? In learning to predict the next word in a Shakespeare play or the next line in a Python script, LLMs stumbled upon something remarkable: they learned to mimic the way humans structure thoughts and solve problems.

As thinking humans, our power comes from using tools. Likewise, as LLMs improve, their power will also come from using tools — tools that enable them to achieve things far beyond language generation.
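The simplest such tool is a calculator. In this arrangement the model only has to write an arithmetic expression, and ordinary code produces the answer. The `calculate` function below is a hypothetical sketch, not an API from ChatGPT or Claude. It parses the expression with Python's `ast` module instead of calling `eval()`, so anything beyond plain arithmetic is rejected.

```python
import ast
import operator

# Operators this hypothetical calculator tool is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def calculate(expression: str) -> float:
    """Evaluate a plain arithmetic expression without using eval()."""

    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    return _eval(ast.parse(expression, mode="eval"))


# The model writes the expression; the tool does the actual arithmetic.
print(calculate("48.17 + 12.50 + 103.99"))
```

The division of labor is the point. The model is good at turning “add up these three receipts” into an expression, and the computer is good at evaluating it.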

We already see this in the small, but powerful set of tools that ChatGPT and Claude have access to. For example, ChatGPT often generates code to handle requests that involve math or data analysis.

Let’s look at an example:

In this case, I’ve uploaded a .CSV file containing responses to a recent NPS survey and I’ve asked ChatGPT to calculate the NPS of the results. In order to do that, notice how ChatGPT first explains its approach. This is useful for the user, and it also seeds the LLM’s context window with the instructions necessary to calculate NPS accurately.
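For reference, the standard Net Promoter Score calculation works as follows. Respondents who answer 9 or 10 are promoters, 7 or 8 are passives, and 0 through 6 are detractors. Passives count toward the total but not toward the numerator. The worked line uses made-up counts of 4 promoters, 2 passives, and 2 detractors.

```latex
\text{NPS} = 100 \times \frac{N_{\text{promoters}} - N_{\text{detractors}}}{N_{\text{total}}}
\qquad \text{e.g.} \qquad
\text{NPS} = 100 \times \frac{4 - 2}{8} = 25
```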

Then, ChatGPT generates code to perform the calculation:

```python
import pandas as pd

# Load the uploaded survey export (hypothetical filename standing in for the user's upload)
survey_data = pd.read_csv("nps_survey.csv")

# Extracting the relevant column
nps_scores = survey_data['How likely are you to recommend Spotify to a friend?']

# Categorizing responses into Promoters, Passives, and Detractors
promoters = nps_scores[(nps_scores == 9) | (nps_scores == 10)]
passives = nps_scores[(nps_scores == 7) | (nps_scores == 8)]
detractors = nps_scores[nps_scores <= 6]

# Calculating counts
total_responses = len(nps_scores)
promoters_count = len(promoters)
detractors_count = len(detractors)

# Calculating NPS: percentage of promoters minus percentage of detractors
nps_score = ((promoters_count - detractors_count) / total_responses) * 100

print(nps_score)
```

So, ChatGPT took a multi-step, tool-using approach to answering my question:

LLM: Understand the intent of “What is the NPS score of these survey responses?”

LLM: Explain the approach for calculating the NPS score.

Code Generation: Generate code to calculate NPS.

File Upload: Load the survey results.

Code Interpreter: Run the generated code.

LLM: Output the NPS score and explain it.
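The File Upload and Code Interpreter steps above can be sketched in a few lines of Python. This is a toy under stated assumptions. The uploaded CSV and its `score` column are made up. The `GENERATED_CODE` string stands in for what the model writes and is not ChatGPT's actual output. Running it with `exec` and a trimmed builtins dict is not a real sandbox, because production code interpreters execute model-written code in an isolated environment.

```python
import csv
import io

# Made-up file standing in for the uploaded NPS survey export.
UPLOADED_CSV = """respondent,score
1,10
2,9
3,9
4,8
5,7
6,6
7,2
8,10
"""

# Stand-in for model-generated code (hypothetical, not ChatGPT's actual output).
GENERATED_CODE = """
promoters = sum(1 for s in scores if s >= 9)
detractors = sum(1 for s in scores if s <= 6)
nps = (promoters - detractors) / len(scores) * 100
"""


def load_scores(csv_text: str) -> list[int]:
    """File Upload step: parse the 'score' column into integers."""
    return [int(row["score"]) for row in csv.DictReader(io.StringIO(csv_text))]


def run_generated_code(code: str, inputs: dict) -> dict:
    """Code Interpreter step: execute generated code and return its variables.

    WARNING: exec() with a trimmed builtins dict is NOT a security sandbox.
    """
    namespace = {"__builtins__": {"sum": sum, "len": len}, **inputs}
    exec(code, namespace)
    return namespace


scores = load_scores(UPLOADED_CSV)
result = run_generated_code(GENERATED_CODE, {"scores": scores})
print(result["nps"])  # 4 promoters, 2 detractors, 8 responses -> 25.0
```

The LLM steps on either side (understanding the request and explaining the result) are omitted. The sketch covers only the deterministic middle, where the arithmetic actually happens.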

Large language models have other tools available. Perplexity makes ample use of the ability to search the web. Both ChatGPT and Claude have the ability to search files and find relevant content. Anthropic recently unveiled computer use for Claude, which enables the model to “use computers the way people do — by looking at a screen, moving a cursor, clicking buttons, and typing text.”

One tool to rule them all

There is one tool to rule them all — function calling. Function calling enables you to make your product’s functionality available to the LLM.

Want to go deeper than this article? Learn more about function calling in OpenAI’s and Claude’s API documentation.

Function calling is surprisingly simple to use. Start by providing a menu of functions you want the LLM to consider using when answering questions. Each function is defined using a schema like this:

```json
{
  "name": "get_delivery_date",
  "description": "Get the delivery date for a customer's order. Call this whenever you need to know the delivery date, for example when a customer asks 'Where is my package'",
  "parameters": {
    "type": "object",
    "properties": {
      "order_id": {
        "type": "string",
        "description": "The customer's order ID."
      }
    },
    "required": ["order_id"],
    "additionalProperties": false
  }
}
```

Interestingly, this schema defines the function using human language. The really important part of the function definition is the description:

“Get the delivery date for a customer's order. Call this whenever you need to know the delivery date, for example when a customer asks 'Where is my package'”

The LLM understands both spoken language and computer language, so it bridges the two. Based on its conversation with the user, it orchestrates which functions to call and with which arguments.
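To make the orchestration concrete, here is a minimal sketch of the application side of function calling. It assumes the model's reply contains a tool call shaped like those in OpenAI's Chat Completions API: a `function` with a `name` and JSON-encoded `arguments`, plus an `id` that the result message refers back to. The model call itself is simulated, and the order data behind the hypothetical `get_delivery_date` implementation is made up. The registry also enforces the `required` and `additionalProperties: false` rules from the schema above.

```python
import json
from datetime import date


# Hypothetical backend function; a real product would query its order system.
def get_delivery_date(order_id: str) -> str:
    fake_orders = {"A123": date(2025, 1, 15)}  # made-up data for illustration
    delivery = fake_orders.get(order_id)
    return delivery.isoformat() if delivery else "unknown order"


# Map each function name advertised to the model onto its implementation,
# along with the parameter rules from its schema.
REGISTRY = {
    "get_delivery_date": {
        "fn": get_delivery_date,
        "required": {"order_id"},
        "allowed": {"order_id"},
    },
}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one model-requested function call and build the reply message."""
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])  # arrives as a JSON string
    entry = REGISTRY.get(name)
    if entry is None:
        content = f"error: unknown function {name}"
    elif not (entry["required"] <= set(args) <= entry["allowed"]):
        content = "error: arguments do not match the schema"
    else:
        content = entry["fn"](**args)
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": content}


# Simulated model output after a user asks "Where is my package? Order A123."
simulated_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_delivery_date", "arguments": '{"order_id": "A123"}'},
}
print(run_tool_call(simulated_call))
```

In a full loop, the returned message is appended to the conversation and sent back to the model, which then phrases the answer for the user.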

Voilà! We’ve broken the second limitation of LLMs. They may be trained to do only one thing (generate language), but now they have a superpower.

They can use tools.

Function calling is not brand spanking new, but it will reach a tipping point in 2025. This kind of delayed adoption is pretty common for AI technologies. For example, retrieval augmented generation was first described in 2020 but really took off in 2024 thanks to larger context windows. Similarly, function calling will take off in 2025 thanks to both larger context windows and improvements in multi-step reasoning.

Now, companies can make both their data (Retrieval Augmented Generation) and their functionality (Tool Augmented Generation) available to AI systems. This requires an important mind shift. Instead of asking “How do I put AI into my product?”, now product builders must also ask “How do I put my product into AI?”.

“How do I put AI into my product?” → “How do I put my product into AI?”

Is Tool Augmented Generation a stopgap?

As LLMs improve — as we get closer to Artificial General Intelligence — will Tool Augmented Generation become obsolete?

I don’t think so.

In Shifting to an AI mindset, I described the two different approaches to building software systems:

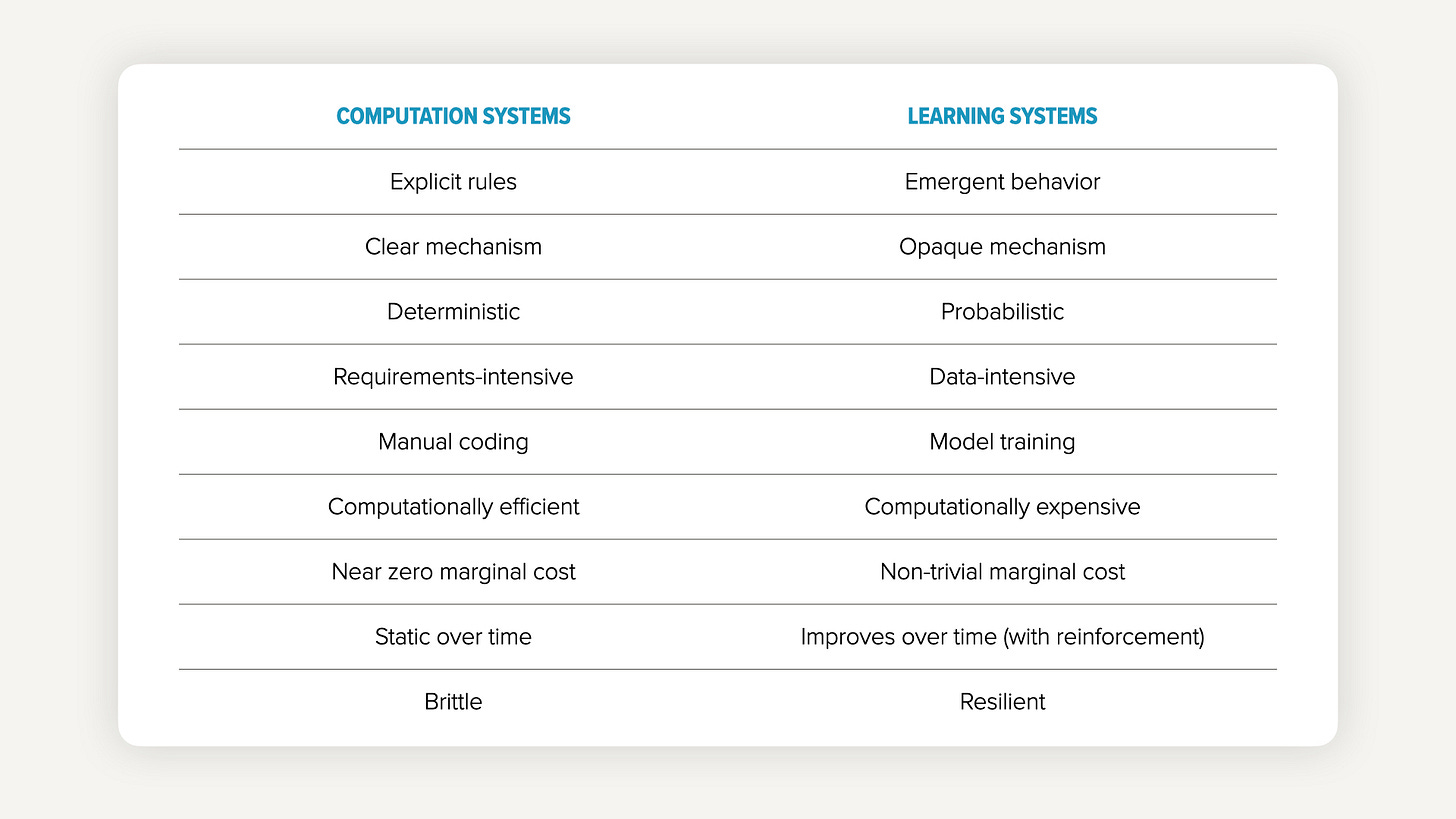

The computational approach uses an input and a set of rules (i.e., computer code) to generate an output. This approach is extremely powerful — typified by photorealistic video games that perform billions of calculations per second to generate convincing game worlds.

The learning approach uses a set of inputs and their desired outputs (for example, a massive collection of photos labeled by what subject matter appears in those photos) to train an AI model, which infers the rules on its own.
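A deliberately tiny sketch makes the contrast concrete, using Celsius-to-Fahrenheit conversion. The computational approach hand-writes the rule. The learning approach recovers it from example input/output pairs. The "learning" here is an ordinary least-squares line fit on made-up examples, a drastically simplified stand-in for how real AI models learn far more complex functions from far more data.

```python
# Computational approach: a programmer writes the rule explicitly.
def fahrenheit_rule(celsius: float) -> float:
    return celsius * 9 / 5 + 32


# Learning approach: infer the rule from example (input, output) pairs.
EXAMPLES = [(0.0, 32.0), (10.0, 50.0), (20.0, 68.0), (30.0, 86.0), (100.0, 212.0)]


def fit_line(pairs: list[tuple[float, float]]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var = sum((x - mean_x) ** 2 for x, _ in pairs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


slope, intercept = fit_line(EXAMPLES)
print(f"learned rule: F = {slope:.2f} * C + {intercept:.2f}")
print(fahrenheit_rule(37.0), slope * 37.0 + intercept)
```

The rule-based version is exact by construction. The learned version is only as good as its examples, and a handful of noisy or unrepresentative examples would yield a worse rule.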

Computational systems and learning systems behave in very different ways and have very different strengths and weaknesses.

The two approaches are complementary. AI won’t eat software — it will use software to do the things outside its domain.

Wait a minute, aren’t agents the future?

Yes, but I think we’re getting ahead of ourselves. Already, the term “agent” has been stretched beyond meaning. A fully realized agent will have three capabilities:

Multi-step reasoning. Agents aren’t limited to the “call and response” interaction model common to chatbots. Instead, agents are able to plan out the multiple steps necessary to complete complex tasks. As we’ve seen, the best models are already doing this to a limited extent, and OpenAI’s o1 model takes that even further.

Tool use. Agents use tools, just as we’ve discussed, to interact with external systems. They don’t just generate language — they manipulate other software systems to achieve outcomes.

Autonomous orchestration. Early agent platforms often work by defining fixed, linear workflows — they chain together AI actions. This is a powerful approach, but it’s one step removed from the full potential of AI agents. A fully realized agent will be able to work autonomously and pull from a large library of tools to accomplish its work.
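The control loop behind these three capabilities can be sketched as follows, using the expense report from the start of this post as the task. At each step a planner looks at the current state, picks a tool from a library, runs it, records the result, and repeats until it decides it is done. This is an illustration under loud assumptions. `stub_planner` is a scripted stand-in for the LLM, which in a real agent would make that choice itself. The tools `extract_amount` and `to_csv` are hypothetical toys, and the receipts are made up.

```python
import csv
import io


# Hypothetical tools. Real ones would call OCR, databases, or product APIs.
def extract_amount(receipt_text: str) -> float:
    """Toy stand-in for OCR: read the number after the last '$'."""
    return float(receipt_text.rsplit("$", 1)[1])


def to_csv(rows: list) -> str:
    """Write (receipt, amount) rows plus a total line as CSV text."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["receipt", "amount"])
    writer.writerows(rows)
    writer.writerow(["TOTAL", sum(amount for _, amount in rows)])
    return buf.getvalue()


TOOLS = {"extract_amount": extract_amount, "to_csv": to_csv}


def stub_planner(state: dict) -> tuple:
    """Stand-in for the model: choose the next tool call from the current state.

    A real agent would ask an LLM to decide; this one is scripted.
    """
    pending = [r for r in state["receipts"] if r not in state["amounts"]]
    if pending:
        return "extract_amount", (pending[0],)
    if state["report"] is None:
        rows = [(r, state["amounts"][r]) for r in state["receipts"]]
        return "to_csv", (rows,)
    return "done", ()


def run_agent(receipts: list, max_steps: int = 20) -> str:
    """Loop: plan a step, run the chosen tool, record the result, repeat."""
    state = {"receipts": receipts, "amounts": {}, "report": None}
    for _ in range(max_steps):
        action, args = stub_planner(state)
        if action == "done":
            return state["report"]
        result = TOOLS[action](*args)
        if action == "extract_amount":
            state["amounts"][args[0]] = result
        else:
            state["report"] = result
    raise RuntimeError("agent did not finish within max_steps")


report = run_agent(["Taxi to airport $42.00", "Team lunch $18.50"])
print(report)
```

The `max_steps` cap matters once the planner is a real model. An autonomous loop needs a way to stop if the model never decides it is finished.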

Tools are the foundation of high functioning agents, so I believe widespread Tool Augmented Generation is a necessary step towards more agentic AI.

TAG in action

Function calling sounds too good to be true, and I went in skeptically… until I saw what it can do.

I’ve been working on a side project, GPTcsv.ai. As a product manager, I often have spreadsheets full of data that I need to analyze, like surveys, customer support messages, and user reviews.

GPTcsv.ai is an AI tool dedicated to data analysis — it enables you to “chat with” a dataset the same way that you can use AI chatbots to chat with a PDF or other text document. We used Tool Augmented Generation to surface a set of database functions to the LLM, and we took advantage of the LLM’s ability to a) call those functions as needed and b) write SQL queries.

The LLM does a remarkable job of orchestrating those tools to bridge between human language and the domain of databases. The result is a natural language interface into any dataset.
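As a rough illustration of this pattern (not GPTcsv.ai's actual implementation), the sketch below loads a spreadsheet into an in-memory SQLite table and exposes a `run_sql` tool that the model could call with a query it wrote. The dataset is made up. The query is hard-coded to stand in for LLM output, and both function names are hypothetical. The `SELECT`-only check is a crude guard, and a real product would want stricter safeguards.

```python
import csv
import io
import sqlite3

# Made-up dataset standing in for an uploaded spreadsheet.
CSV_TEXT = """source,rating,comment
app_store,5,Love it
app_store,2,Crashes on launch
support,1,Cannot log in
app_store,4,Pretty good
"""


def load_csv_into_sqlite(csv_text: str, table: str = "data") -> sqlite3.Connection:
    """Hypothetical tool: load CSV rows into an in-memory SQLite table (TEXT columns)."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, body = rows[0], rows[1:]
    conn = sqlite3.connect(":memory:")
    columns = ", ".join(f'"{c}" TEXT' for c in header)
    conn.execute(f'CREATE TABLE "{table}" ({columns})')
    placeholders = ", ".join("?" for _ in header)
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', body)
    return conn


def run_sql(conn: sqlite3.Connection, query: str) -> list[tuple]:
    """Hypothetical tool exposed to the model: run a SELECT query and return rows."""
    if not query.lstrip().upper().startswith("SELECT"):
        raise ValueError("only SELECT queries are allowed")
    return conn.execute(query).fetchall()


conn = load_csv_into_sqlite(CSV_TEXT)
# A query of the kind an LLM might write for "What's the average rating by source?"
llm_query = (
    "SELECT source, AVG(CAST(rating AS REAL)), COUNT(*) "
    "FROM data GROUP BY source ORDER BY source"
)
print(run_sql(conn, llm_query))
```

The model handles the translation from a plain-English question into SQL, and the database does the counting and averaging it would otherwise get wrong.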

This is a glimpse into what is possible, and it requires a new way of thinking.

Here's the mindset shift that will separate the winners from the losers: AI isn't just another feature in your product…

AI is your most demanding user.

Want to get started with a more tool-oriented approach to AI?

I recommend trying out you.com. You.com gives you access to 20+ AI models in one centralized, easy-to-use AI chat platform. You can also use tools like file upload, web search, and custom data integrations.

With you.com, you’ll:

Always have the right model for the right task, with access to the latest models from OpenAI, Anthropic, Google and more

Be able to interact with different models in the same thread, getting different angles and opinions. It's like having your own personal board of advisors.

For example, let's say you're brainstorming new names for a product you are launching. You can ask GPT-4o for ideas, then switch to Claude 3.5 Sonnet to get feedback and a new perspective.

Until recently, I’d been switching back and forth manually. It's a game changer to access multiple models in a single thread.

As a Ravi on Product subscriber, get 6 months free of you.com Pro ($120 value)
